Explore the o4-mini-high Model by OpenAI

The o4-mini-high model by OpenAI is a premium reasoning variant built for precision, efficient throughput, and advanced multimodal intelligence.

Trusted by users from 10,000+ companies

OpenAI o4-mini-high brings a much-needed balance between speed, thoughtful depth, and consistent reasoning in demanding tasks.

With the “high” variant of o4-mini, OpenAI allocates more compute and internal reasoning per prompt, enabling more robust chain-of-thought processing and deeper context handling.

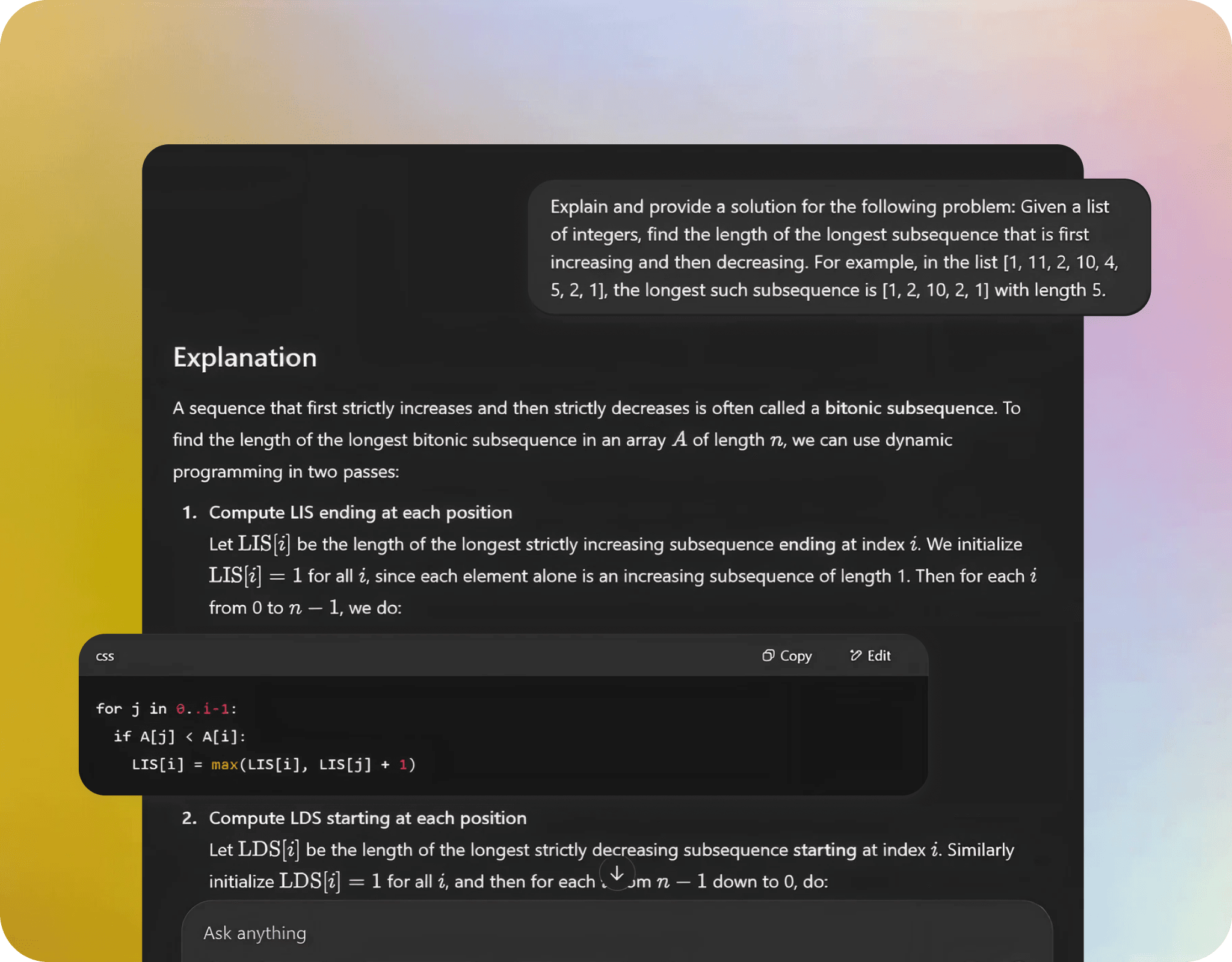

The model supports text + image input, file uploads, and tool-augmented reasoning (e.g., Python interpreter, browsing), which makes it suited for tasks that span modalities.

While the “mini” architecture brings lower latency and cost compared to full-scale models, the “high” setting trades slightly more cost for improved quality, making it a practical sweet spot between budget and precision.
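For readers who reach the model through an API rather than a chat interface, the “high” setting is typically expressed as a reasoning-effort option on the o4-mini model. The sketch below is illustrative only: it assumes the Chat Completions request shape with `model="o4-mini"` and `reasoning_effort="high"`, the helper name `build_request` and the example URL are hypothetical, and the code only builds the payload without sending it anywhere.

```python
import json

ALLOWED_EFFORTS = ("low", "medium", "high")


def build_request(prompt, image_url=None, effort="high"):
    """Build a Chat Completions-style payload (hypothetical helper, nothing is sent)."""
    if effort not in ALLOWED_EFFORTS:
        raise ValueError(f"effort must be one of {ALLOWED_EFFORTS}")

    # A single user message can mix text and image parts.
    content = [{"type": "text", "text": prompt}]
    if image_url is not None:
        content.append({"type": "image_url", "image_url": {"url": image_url}})

    return {
        "model": "o4-mini",           # assumption: base model name
        "reasoning_effort": effort,   # assumption: "high" corresponds to o4-mini-high
        "messages": [{"role": "user", "content": content}],
    }


if __name__ == "__main__":
    payload = build_request(
        "Summarize the trend shown in this chart.",
        image_url="https://example.com/chart.png",  # placeholder URL
    )
    print(json.dumps(payload, indent=2))
```

Lowering `effort` to `"medium"` or `"low"` in this sketch would model the cheaper, faster end of the trade-off described above.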

Done with generic, shallow responses? The o4-mini-high model steps in when you need sharp reasoning and fresh perspectives, drawing deeper insight from layered information. It adapts to complex inputs to deliver higher-quality outputs.

OpenAI o4-mini-high brings precision and speed in one compact model, proving that smaller can still mean capable.

Handles up to roughly 200,000 input tokens at once, enabling deep multi-document analysis, long conversations, or extensive textual workflows.
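As a rough illustration of what a window of about 200,000 input tokens means in practice, the sketch below estimates whether a set of documents would fit. It assumes about 4 characters per token, which is a common rule of thumb for English text and not the model's actual tokenizer; the function names and the `reserve` parameter are hypothetical.

```python
CONTEXT_LIMIT = 200_000  # approximate input window stated in the text
CHARS_PER_TOKEN = 4      # assumption: rough heuristic, not a real tokenizer


def estimate_tokens(text):
    """Estimate token count by ceiling-dividing character length."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents, reserve=0):
    """Return (fits, estimated_total) for a list of document strings.

    `reserve` holds back tokens for instructions or other prompt overhead.
    """
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + reserve <= CONTEXT_LIMIT, total


if __name__ == "__main__":
    docs = ["first report " * 1000, "second report " * 2000]
    fits, total = fits_in_context(docs, reserve=5_000)
    print(f"estimated tokens: {total}, fits: {fits}")
```

For real workloads, an exact tokenizer count is the safer check, since the heuristic can be far off for code or non-English text.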

The “high” setting dedicates more computation per query, improving logic, coherence, and quality of output compared to standard mini-variants.

Built-in access to browsing, Python code execution, file upload, and image-manipulation tools enables complex workflows within one model.

Processes text, images, and mixed-mode input (e.g., diagrams + text) in one prompt, making it versatile for visual reasoning.

Designed for high-volume tasks with faster responses and better cost-efficiency than larger models, making it practical for scaled operations.

Improved ability to interpret and follow detailed user instructions, delivering answers in the desired format with fewer misunderstandings.

Underpinned by a refreshed safety training set and monitoring systems, the model maintains strong refusal rates and meets risk-mitigation standards.

Advanced logging, usage-tier policies, and audit traces enable organisations to monitor usage, meet regulatory requirements, and maintain AI governance.

Although it offers high reasoning quality, the model aims for balanced pricing relative to full-scale models, a “sweet spot” between power and efficiency.
