Meet OpenAI o4-mini: A Flexible and Smarter AI Model

Explore a fast, cost-efficient reasoning model from OpenAI that delivers powerful results with minimal overhead.

Trusted by users from 10,000+ companies

OpenAI o4-mini balances power, efficiency, and adaptability, supporting a wide range of use cases at lower cost.

o4-mini supports the same broad range of languages as its larger counterpart, enabling reliable comprehension and generation across many scripts and cultural contexts.

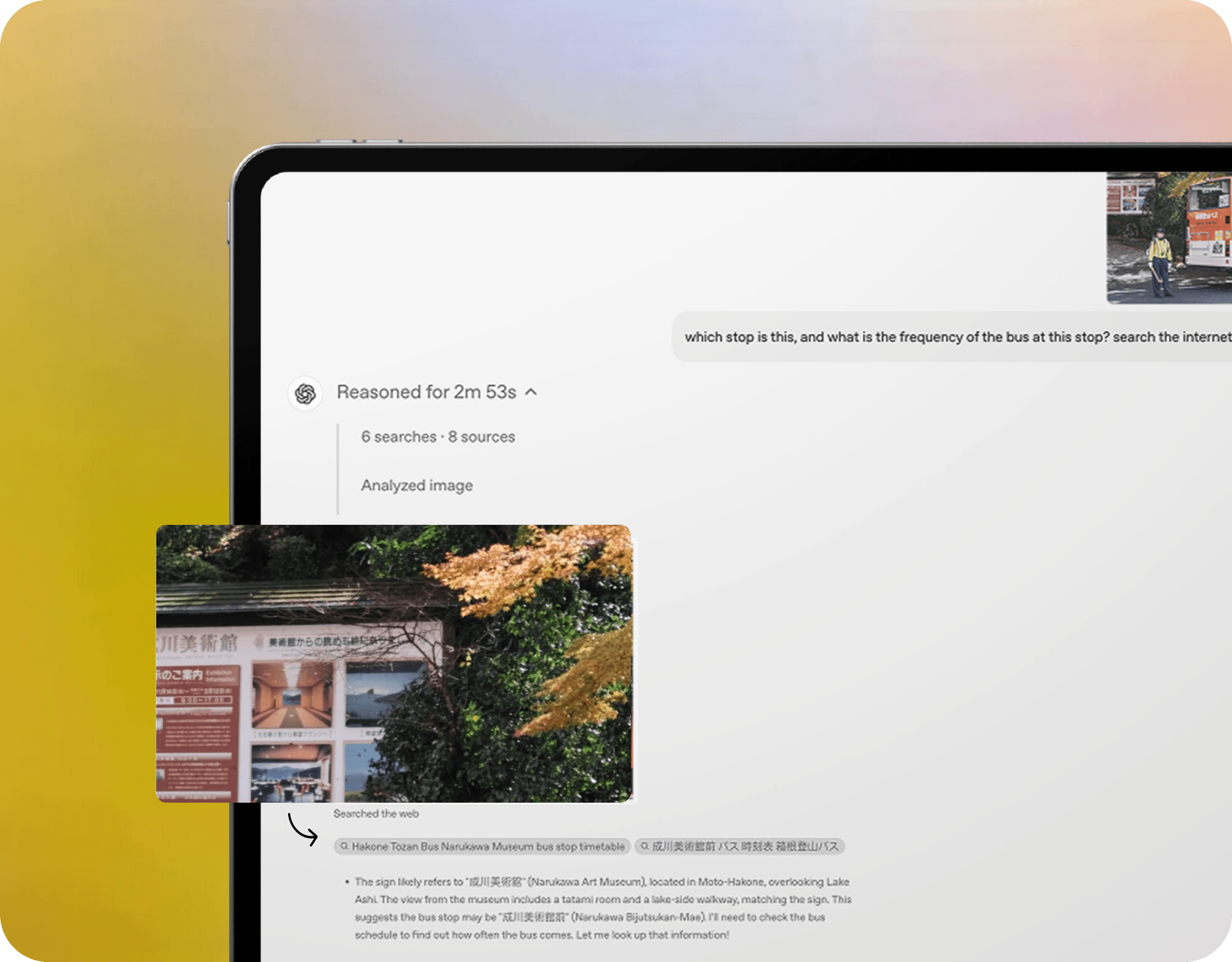

With a large context window, o4-mini processes both text and image inputs in a unified flow, allowing it to reason about diagrams, screenshots, and complex visual-text prompts.

o4-mini was built with a revamped safety stack, including revised training data and real-time monitoring, to better handle high-risk categories like biorisk, cybersecurity, and AI self-improvement.

The model handles not just written prompts but visual, uploaded, and multi-file inputs seamlessly. It avoids disconnects between media types and sustains coherent responses even when you shift formats.

OpenAI o4-mini gives you depth, clarity and efficiency in one lean package.

Supports significantly increased throughput, allowing you to run many more requests per minute than earlier “mini” models.

Offers built-in support for structured data responses (such as JSON), which simplifies downstream parsing and tool integration.
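As a rough illustration of why structured responses simplify downstream parsing, here is a minimal sketch that validates a JSON reply before handing it to other code. It makes no API call: the `sample_reply` string, the `REQUIRED_FIELDS` schema, and the `parse_structured_reply` helper are all hypothetical and stand in for whatever your application actually receives.

```python
import json

# Hypothetical schema for illustration: field name -> expected Python type.
REQUIRED_FIELDS = {"title": str, "priority": int}


def parse_structured_reply(raw: str) -> dict:
    """Parse a JSON reply and check it against REQUIRED_FIELDS."""
    data = json.loads(raw)  # raises json.JSONDecodeError on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in data:
            raise ValueError(f"missing field: {name}")
        if not isinstance(data[name], expected_type):
            raise ValueError(f"field {name!r} should be {expected_type.__name__}")
    return data


# Assumed example reply; a real reply would come from the model.
sample_reply = '{"title": "Renew licence", "priority": 2}'
ticket = parse_structured_reply(sample_reply)
print(ticket["title"], ticket["priority"])
```

Validating at the boundary like this means the rest of the application can rely on the fields being present and correctly typed.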

Designed with lower latency and cost in mind, making it suitable for embedded systems, lightweight agents or edge-deployed apps.

The model can be fine-tuned for custom domains or specialized tasks, unlocking tailored behaviour for specific workflows.

Includes upgraded safety training, refined refusal patterns and transparency in how it handles high-risk prompts or domains.

With support for very long input documents and large outputs (up to ~100,000 tokens), it accommodates extended workflows.

Beyond just multimodal input, the model intelligently determines when to invoke tools as part of its reasoning chain.
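On the application side, tool invocation usually means routing a model-issued request to a local function. The sketch below shows one simple way to do that, assuming the request arrives as a JSON object with `name` and `arguments` keys. That request shape, the `TOOLS` registry, and the `get_word_count` tool are illustrative assumptions, not a description of the actual API format.

```python
import json
from typing import Callable, Dict


def get_word_count(text: str) -> int:
    """Hypothetical tool: count whitespace-separated words."""
    return len(text.split())


# Registry mapping tool names to local functions (assumed for this sketch).
TOOLS: Dict[str, Callable[..., object]] = {"get_word_count": get_word_count}


def dispatch_tool_call(raw_request: str) -> object:
    """Look up the requested tool by name and call it with its arguments."""
    request = json.loads(raw_request)
    name = request["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**request.get("arguments", {}))


# Assumed request shape; a real request would be produced by the model.
raw = '{"name": "get_word_count", "arguments": {"text": "fast and lean"}}'
result = dispatch_tool_call(raw)
print(result)
```

Keeping the registry explicit means the model can only trigger functions you have deliberately exposed.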

Engineered to reduce cost per token compared with earlier large models, making reasoning-capable AI more accessible.

Performs well not only in maths and coding but also shows improved accuracy on broader reasoning tasks.
